DESIGN METHODS PART I: Types of group designs
Jacob Campbell, Ph.D. LICSW at Heritage University
SOWK 460w Spring 2024

Slide 1

Slide 2

AGENDA
➤ Developing your research question
➤ Peer review of logic models
➤ Key components for evaluation methods
➤ Threats to validity
➤ Types of group designs

Slide 3

RESEARCH QUESTION
What do you want to know?

Slide 4

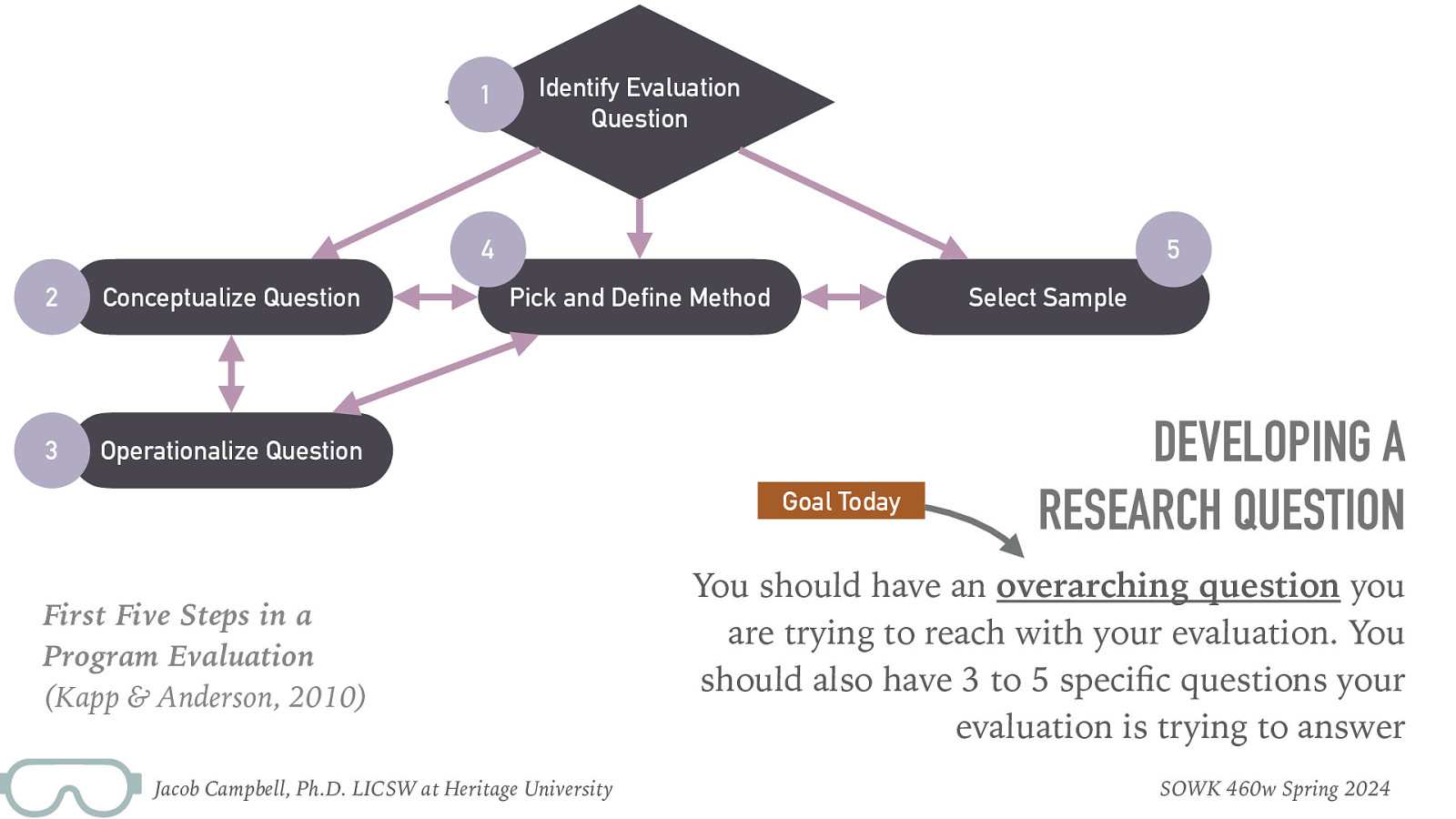

DEVELOPING A RESEARCH QUESTION
You should have an overarching question you are trying to answer with your evaluation. You should also have 3 to 5 specific questions your evaluation is trying to answer.
First Five Steps in a Program Evaluation (Kapp & Anderson, 2010):
1. Identify evaluation question
2. Conceptualize question
3. Operationalize question
4. Pick and define method
5. Select sample
(Figure label: Goal Today)

Slide 5

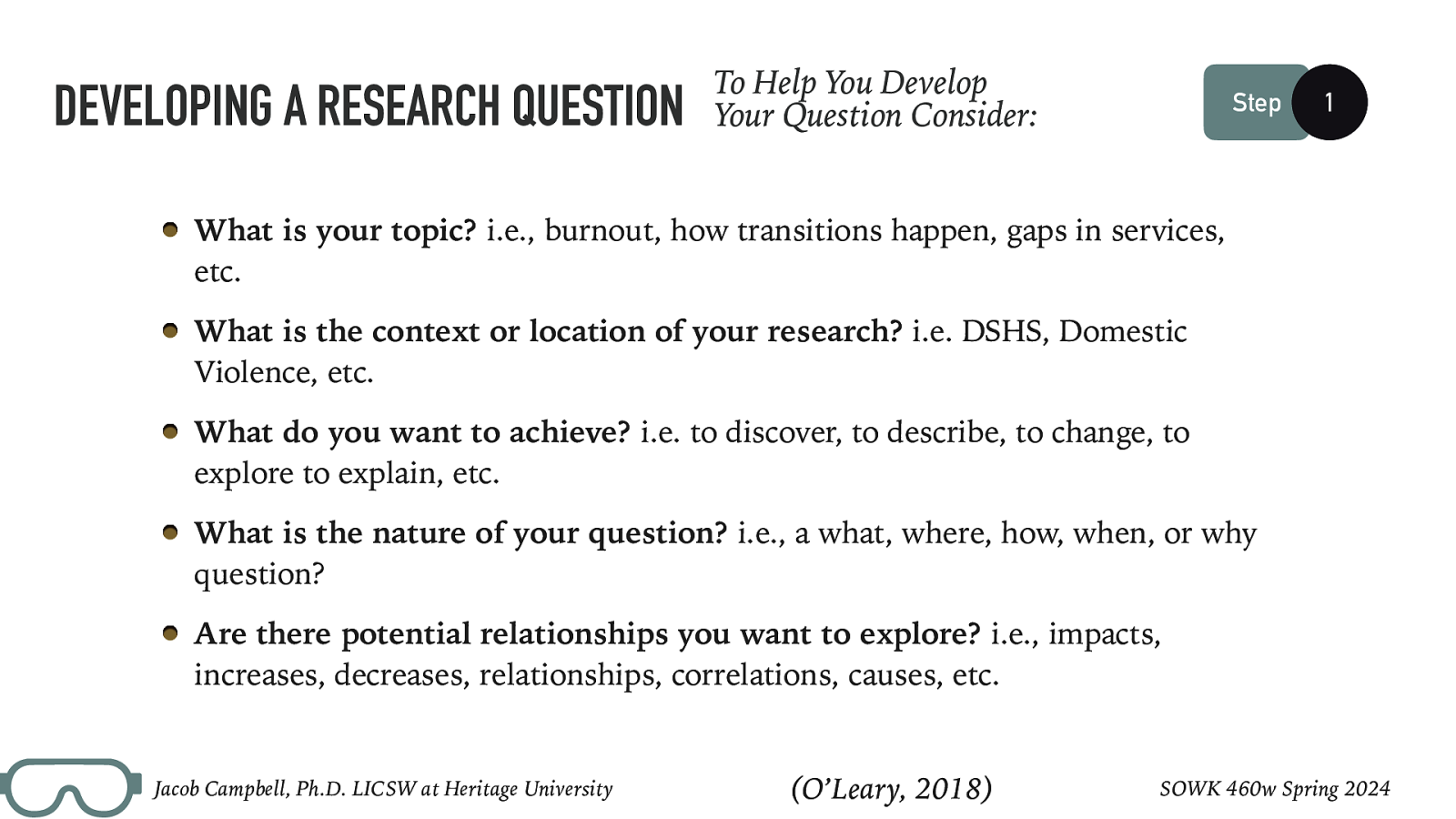

DEVELOPING A RESEARCH QUESTION
Step 1: To help you develop your question, consider:
➤ What is your topic? (e.g., burnout, how transitions happen, gaps in services)
➤ What is the context or location of your research? (e.g., DSHS, domestic violence)
➤ What do you want to achieve? (e.g., to discover, describe, change, explore, or explain)
➤ What is the nature of your question? (e.g., a what, where, how, when, or why question)
➤ Are there potential relationships you want to explore? (e.g., impacts, increases, decreases, relationships, correlations, causes)
(O'Leary, 2018)

Slide 6

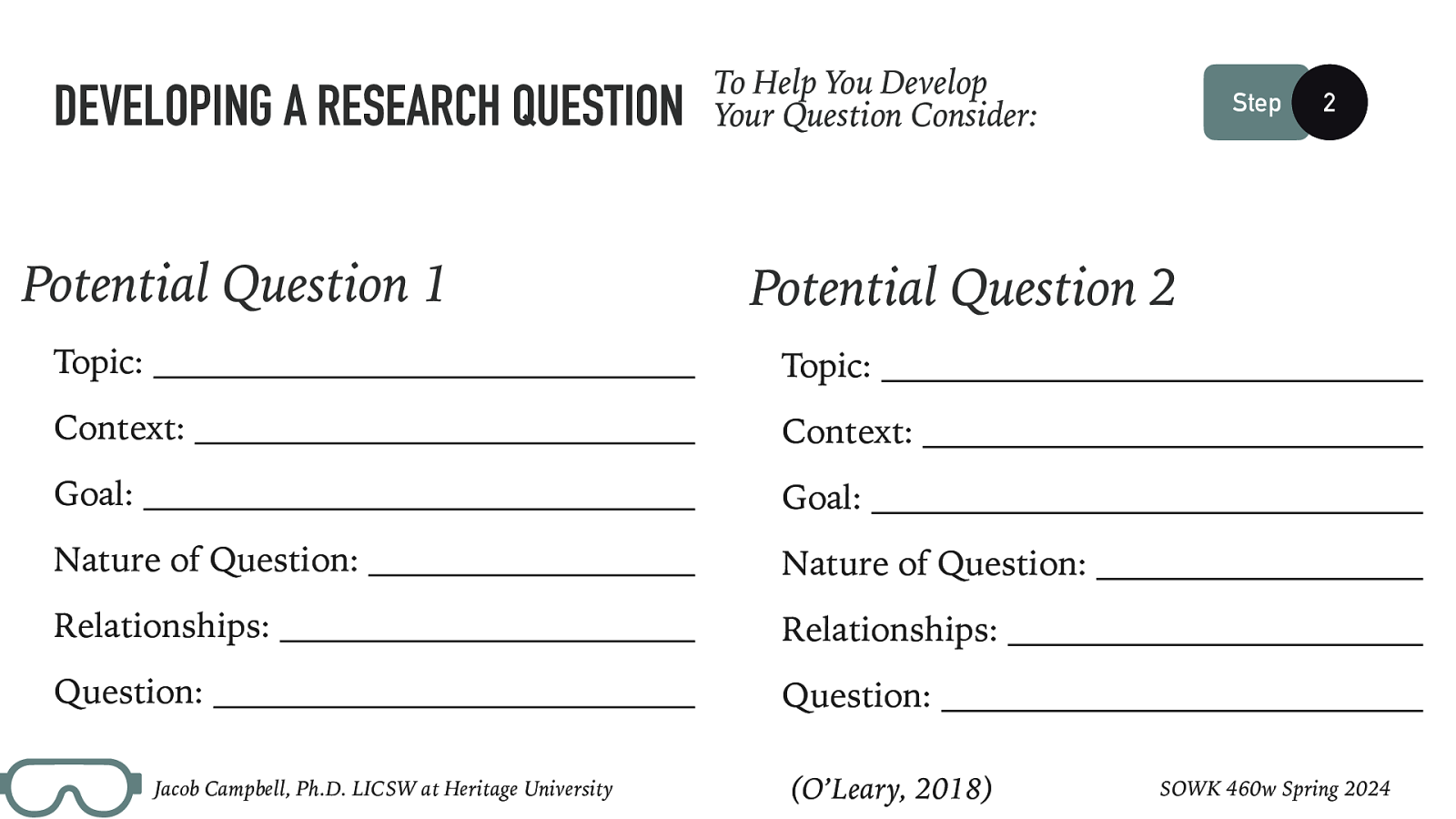

DEVELOPING A RESEARCH QUESTION
Step 2: To help you develop your question, draft two potential questions (Potential Question 1 and Potential Question 2). For each, consider:
➤ Topic:
➤ Context:
➤ Goal:
➤ Nature of question:
➤ Relationships:
➤ Question:
(O'Leary, 2018)

Slide 7

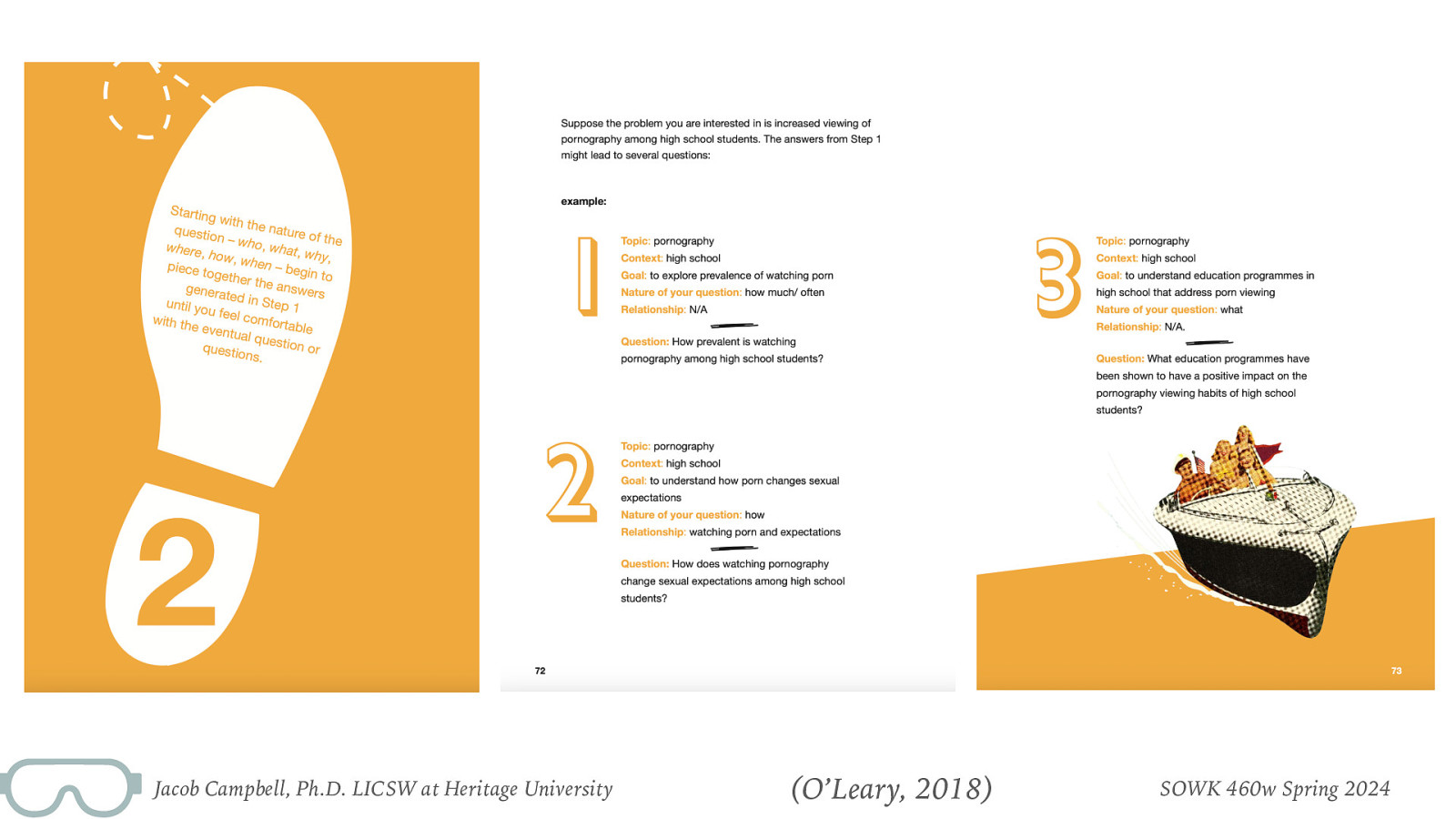

(O'Leary, 2018)

Slide 8

DEVELOPING A RESEARCH QUESTION
Step 3: Draft a question…
(O'Leary, 2018)

Slide 9

DEVELOPING A RESEARCH QUESTION
Step 4: Refine your question.

1. Rewrite your question and circle terms that could be ambiguous.
2. Go through and clarify those terms.
3. Then, redraft your question, bringing more clarity and description.

(O'Leary, 2018)

Slide 10

LOGIC MODEL
Overview of Program

Slide 11

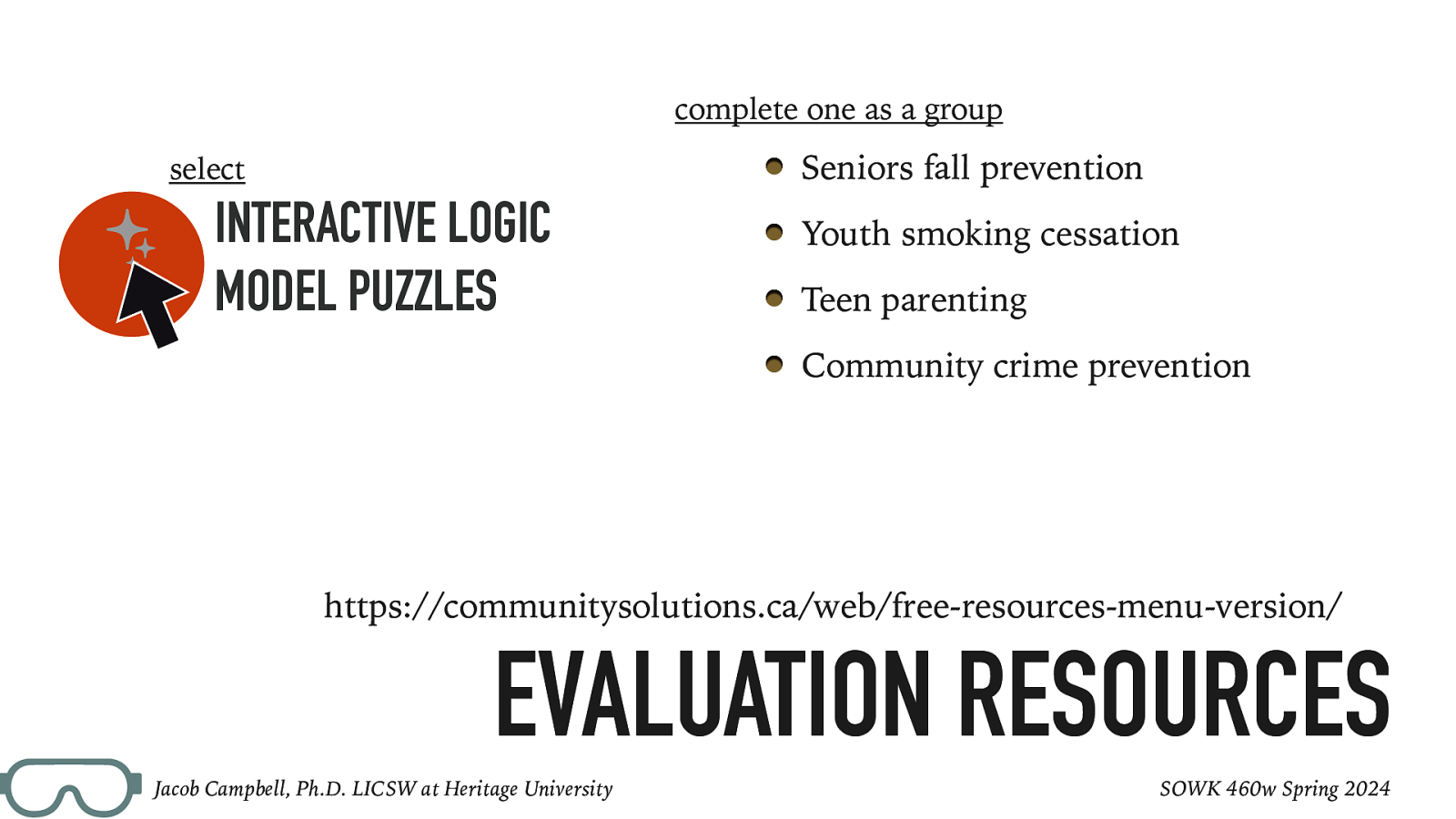

INTERACTIVE LOGIC MODEL PUZZLES
Select and complete one as a group:
➤ Seniors fall prevention
➤ Youth smoking cessation
➤ Teen parenting
➤ Community crime prevention
EVALUATION RESOURCES: https://communitysolutions.ca/web/free-resources-menu-version/

Slide 12

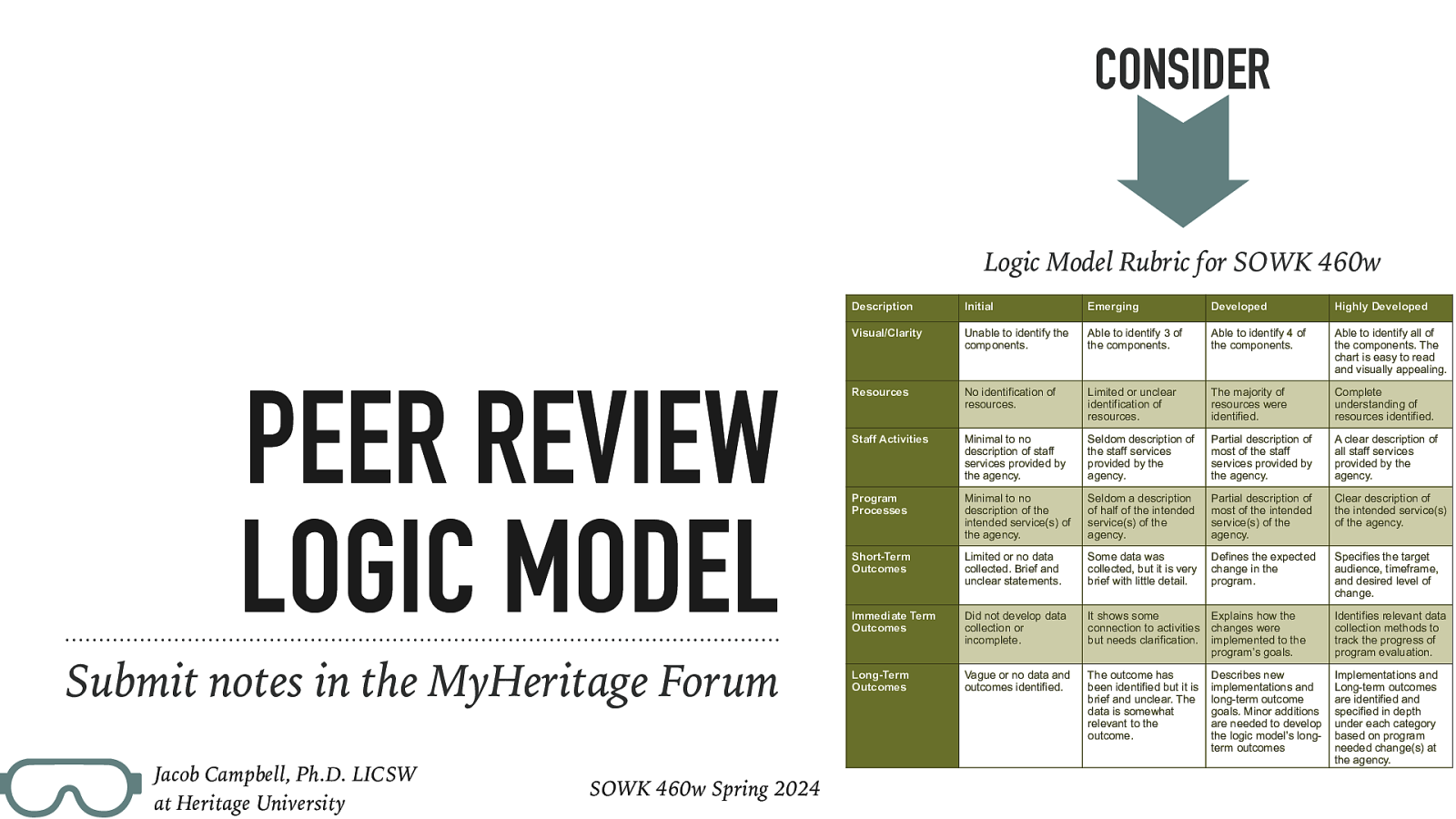

PEER REVIEW LOGIC MODEL
Consider the Logic Model Rubric for SOWK 460w. Submit notes in the MyHeritage Forum.

| Description | Initial | Emerging | Developed | Highly Developed |
|---|---|---|---|---|
| Visual/Clarity | Unable to identify the components. | Able to identify 3 of the components. | Able to identify 4 of the components. | Able to identify all of the components. The chart is easy to read and visually appealing. |
| Resources | No identification of resources. | Limited or unclear identification of resources. | The majority of resources are identified. | All resources are identified, showing complete understanding. |
| Staff Activities | Minimal to no description of the staff services provided by the agency. | Sparse description of the staff services provided by the agency. | Partial description of most of the staff services provided by the agency. | Clear description of all staff services provided by the agency. |
| Program Processes | Minimal to no description of the intended service(s) of the agency. | Describes only about half of the intended service(s) of the agency. | Partial description of most of the intended service(s) of the agency. | Clear description of the intended service(s) of the agency. |
| Short-Term Outcomes | Limited or no data collected. Brief and unclear statements. | Some data was collected, but it is very brief with little detail. | Defines the expected change in the program. | Specifies the target audience, timeframe, and desired level of change. |
| Intermediate-Term Outcomes | Data collection not developed or incomplete. | Shows some connection to activities but needs clarification. | Explains how the changes were implemented in relation to the program's goals. | Identifies relevant data collection methods to track the progress of the program evaluation. |
| Long-Term Outcomes | Vague or no data and outcomes identified. | The outcome is identified, but it is brief and unclear. The data is somewhat relevant to the outcome. | Describes new implementations and long-term outcome goals. Minor additions are needed to develop the logic model's long-term outcomes. | Implementations and long-term outcomes are identified and specified in depth under each category, based on the program's needed change(s) at the agency. |

Slide 13

EVALUATION DESIGN
Method for Collecting Data

Slide 14

SOURCES OF INFORMATION
➤ Questionnaires, surveys, checklists
➤ Interviews
➤ Observations
➤ Focus groups
➤ Existing data (systematically gathered data, case files, treatment documentation, etc.)
➤ Controlled experiments

Slide 15

SURVEY QUESTIONS
Many of you are planning to use a survey as part of your program evaluation. Working in your groups, spend time reviewing the CDC's tip sheet and discussing potential questions.
COMMON PITFALLS IN SURVEY QUESTIONS
➤ Double-barreled questions
➤ Introducing bias
➤ Balanced question and response
➤ Negative items
https://www.cdc.gov/dhdsp/docs/constructing_survey_questions_tip_sheet.pdf

Slide 16

OTHER DESIGN CHOICES
Coming later this semester…
➤ Qualitative designs and applications
➤ Consumer satisfaction

Slide 17

METHODS FOR EVALUATION (Kapp & Anderson, 2010)
➤ Sample selection
➤ Data collection
➤ Analysis
➤ Reporting

Slide 18

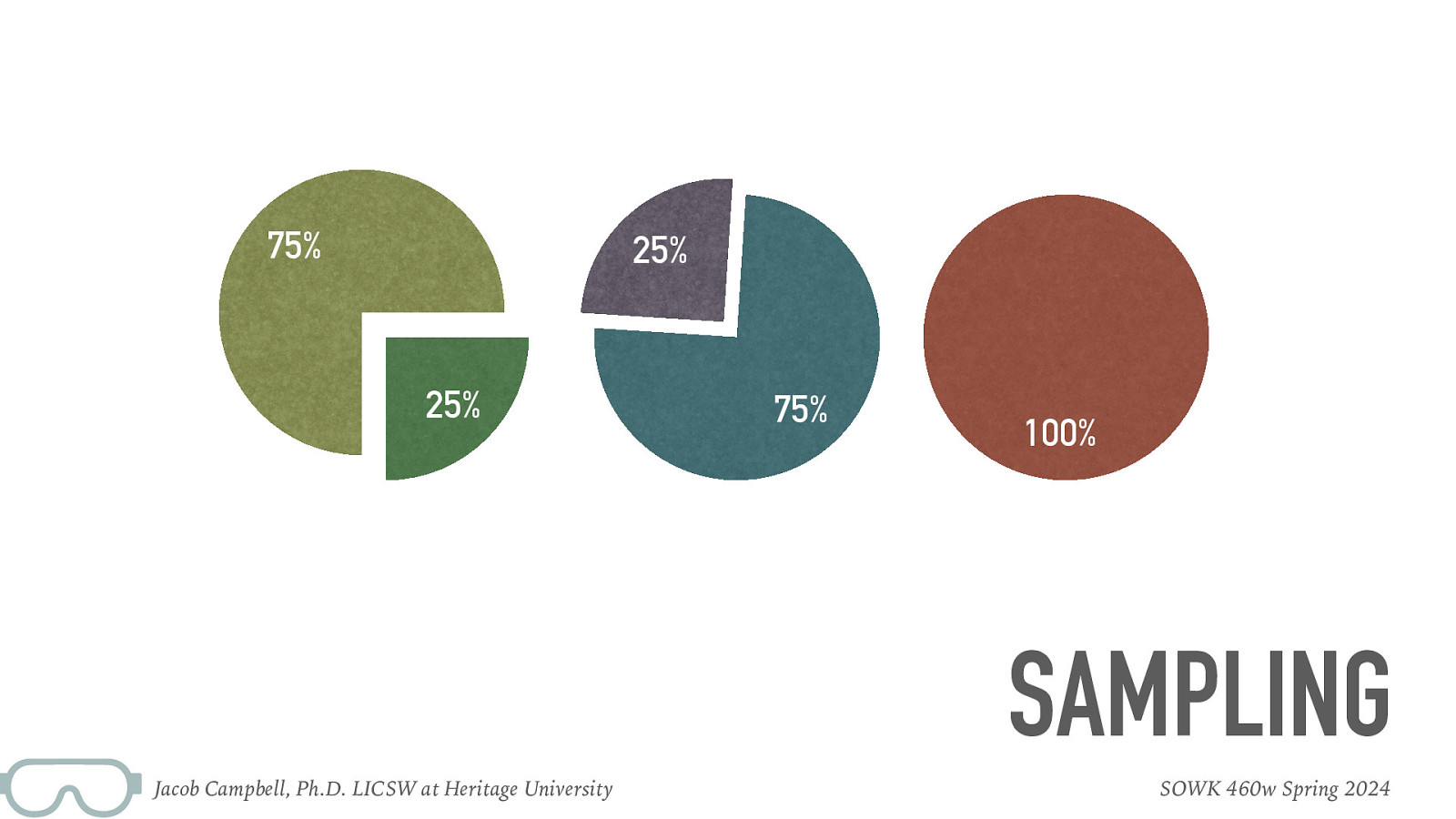

SAMPLING
[Figure: sampling diagram with portions labeled 100%, 75%, and 25%]
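The extracted slide does not explain the percentages in its figure, so as an assumption, here is one common sampling approach: drawing a 25% simple random sample without replacement from a client list. The function name `simple_random_sample` and the client IDs are hypothetical, chosen only for illustration.

```python
import random

def simple_random_sample(population, fraction, seed=None):
    """Draw a simple random sample without replacement (hypothetical helper).

    `fraction` is the share of the population to include (e.g., 0.25 for 25%).
    The sample size is rounded to the nearest whole person.
    """
    if not 0 <= fraction <= 1:
        raise ValueError("fraction must be between 0 and 1")
    rng = random.Random(seed)  # seeded generator so the draw can be reproduced
    k = round(len(population) * fraction)
    return rng.sample(population, k)

# Hypothetical roster of 100 client IDs (illustrative only)
clients = [f"client-{i:03d}" for i in range(1, 101)]
sample = simple_random_sample(clients, 0.25, seed=460)
print(len(sample))  # 25
```

Because every client has the same chance of selection, a simple random sample avoids the evaluator hand-picking participants; recording the seed lets someone else reproduce the same draw.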

Slide 19

VALIDITY
How to Address Internal Validity
Photo by Jen Theodore on Unsplash

Slide 20

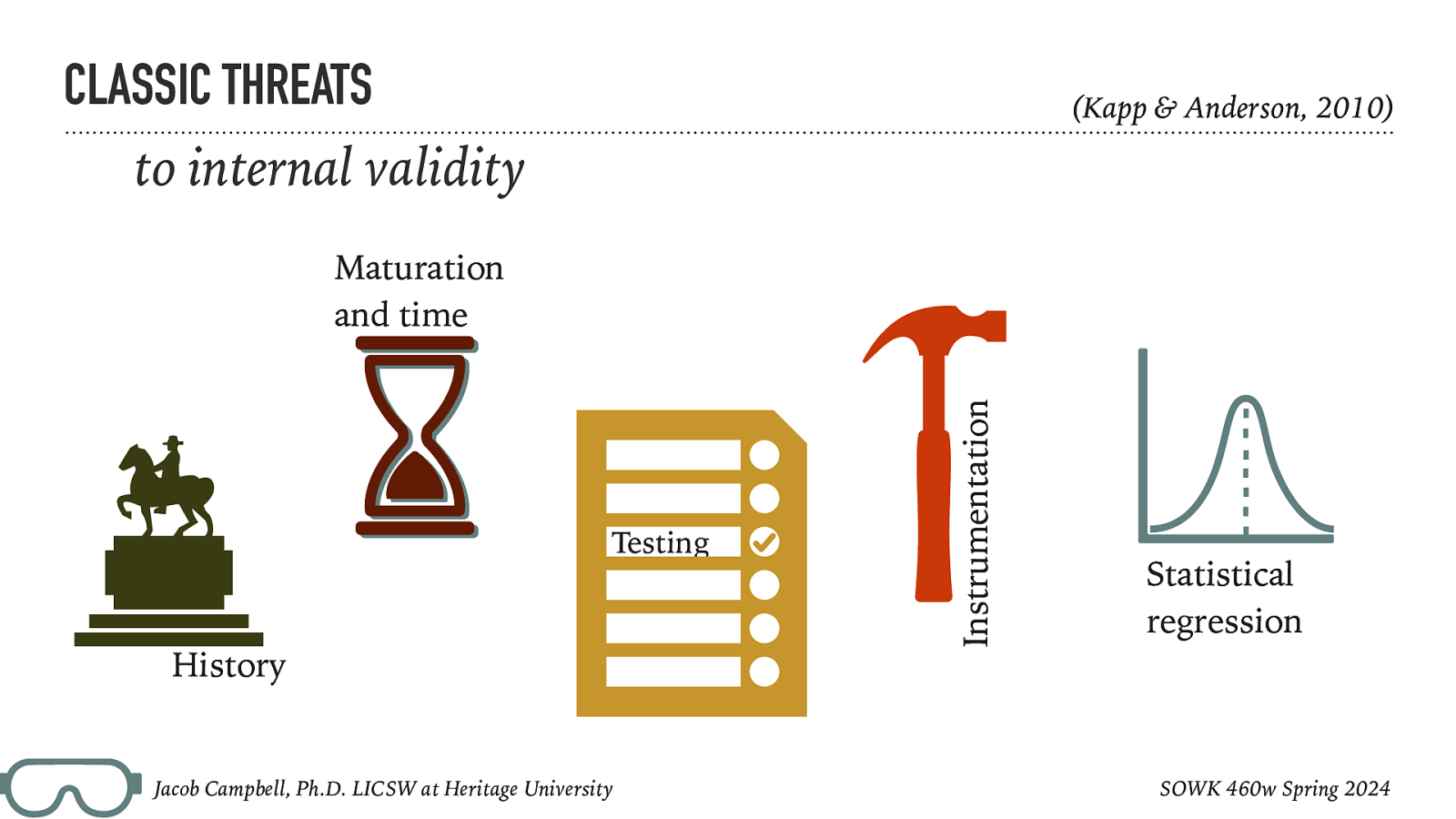

CLASSIC THREATS TO INTERNAL VALIDITY (Kapp & Anderson, 2010)
➤ Testing
➤ History
➤ Instrumentation
➤ Maturation and time
➤ Statistical regression
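Statistical regression (regression to the mean) is easier to see in a small simulation. This is a sketch under assumed conditions: each person has a stable "true" score, every test adds independent measurement error, and no intervention happens at all. Selecting only people with extreme pre-test scores still produces a post-test mean that drifts back toward the average, which could be mistaken for a program effect. All numbers here are hypothetical.

```python
import random
import statistics

rng = random.Random(2024)

# Hypothetical model: stable true score plus independent measurement error per test.
true_scores = [rng.gauss(50, 10) for _ in range(10_000)]
pretest = [t + rng.gauss(0, 10) for t in true_scores]
posttest = [t + rng.gauss(0, 10) for t in true_scores]  # no intervention at all

# Select only people with extreme pre-test scores (e.g., "highest need").
selected = [i for i, score in enumerate(pretest) if score >= 70]
pre_mean = statistics.mean(pretest[i] for i in selected)
post_mean = statistics.mean(posttest[i] for i in selected)

print(f"selected: {len(selected)}")
print(f"pretest mean:  {pre_mean:.1f}")
print(f"posttest mean: {post_mean:.1f}")  # expected to sit closer to 50, with no intervention
```

A comparison or control group selected the same way would show the same drift, which is one reason designs with a comparison group help rule out this threat.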

Slide 21

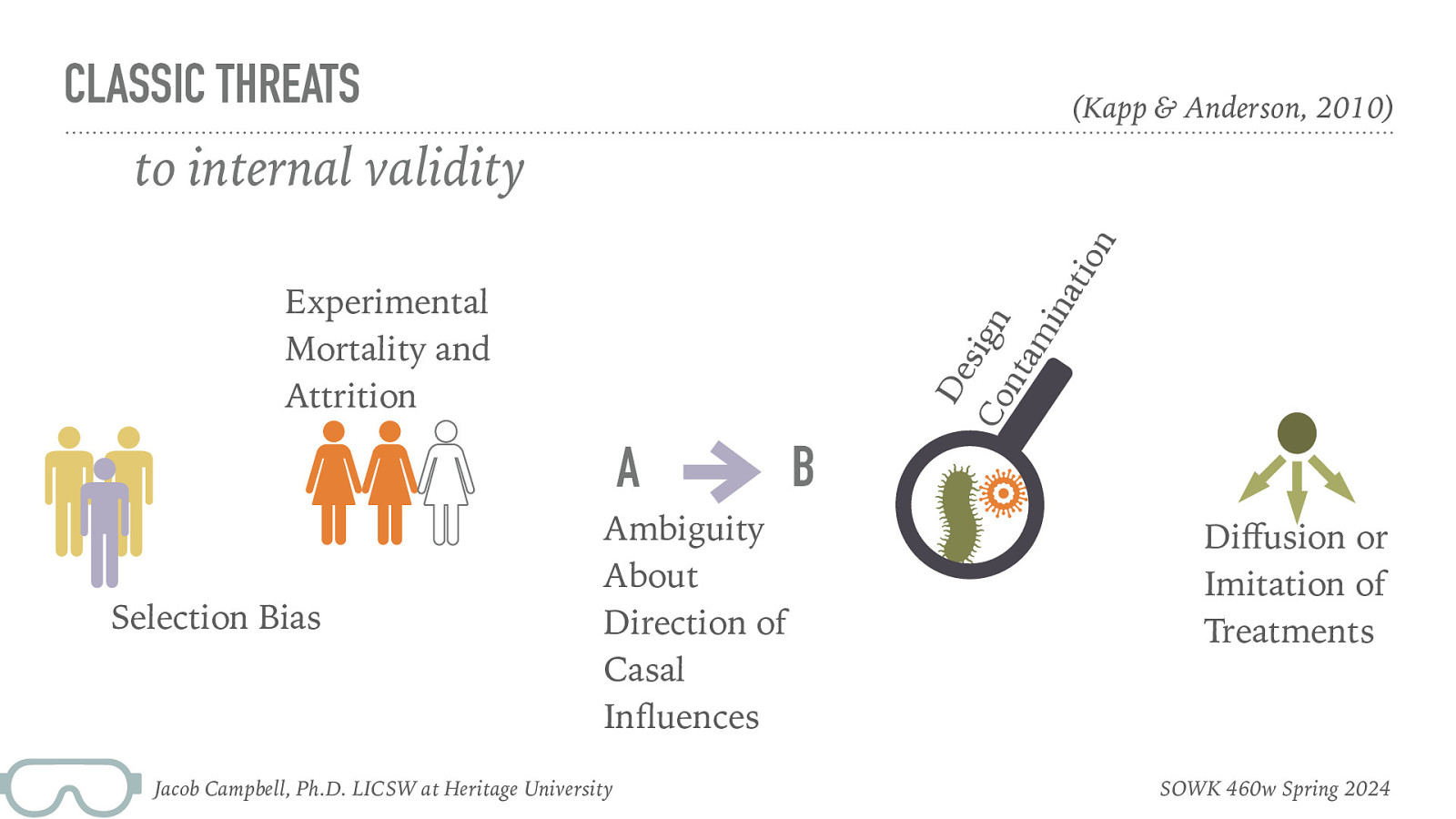

CLASSIC THREATS TO INTERNAL VALIDITY (Kapp & Anderson, 2010)
➤ Design contamination
➤ Experimental mortality and attrition
➤ Selection bias
➤ Ambiguity about direction of causal influences
➤ Diffusion or imitation of treatments

Slide 22

CLASSIC THREATS TO INTERNAL VALIDITY (Kapp & Anderson, 2010)
➤ Interaction effects

Slide 23

COMPONENTS OF DESIGN (Kapp & Anderson, 2010)
What should be included in general:
➤ Defining and describing the intervention or program elements to be evaluated
➤ Establishing the time order of the independent and dependent variables
➤ Manipulating the independent variable
➤ Establishing the relationship between the independent and dependent variables
➤ Controlling for rival hypotheses
➤ Using at least one control group
➤ Assigning the persons who are subjects in a random manner

Slide 24

PRE-TEST / POST-TEST
Design Methods Activity
[Figure: Before Intervention → Intervention → After Intervention]
Working in small groups, what would you create as a pre-test / post-test?

Slide 25

TYPES OF GROUP DESIGNS (Kapp & Anderson, 2010)
What should be included in general:
➤ Case study approach
➤ One group post-test design
➤ One-group pre-test and post-test
➤ Post-test only with nonequivalent groups
➤ Experimental design
➤ Matched comparison groups

Slide 26

TYPES OF GROUP DESIGNS
Planning in your groups:
➤ Are you going to use a group design for your program evaluation, or what method will you be using?
➤ What type of group design method are you going to use?
➤ What challenges do you think you will encounter?

Slide 27

CASE STUDY APPROACH (Kapp & Anderson, 2010)
DESCRIPTION
The group in which an intervention has been introduced is the focus of the study. The study chronicles the progress and process of the group, describing the changes (or lack of change) after the introduction of the intervention.
STRENGTHS
➤ Detailed exploration
➤ Ability to understand complexity
➤ Rich narrative
LIMITATIONS
➤ No comparison group
➤ Case may not have the same qualities as the sample
➤ Difficult to weigh elements of the narrative

Slide 28

ONE GROUP POST-TEST ONLY DESIGN (Kapp & Anderson, 2010)
DESCRIPTION
This design involves implementing an intervention with a group of people for whom that intervention was designed, and then administering a simple test or other measurement to ascertain the results of that intervention. This can be described as an A-B design, with A being the pre-intervention status and B representing the post-intervention status.
STRENGTHS
➤ Design is simple and practical
➤ Intervention is intended to increase positive outcome
➤ Intervention delivered and measured
LIMITATIONS
➤ There are concerns about the validity of the findings and the validity of the measurement instrument; consequently, the effectiveness of the intervention cannot be presented with a high degree of confidence

Slide 29

ONE-GROUP PRE-TEST & POST-TEST DESIGN (Kapp & Anderson, 2010)
DESCRIPTION
A target group is assessed prior to the intervention and, after the intervention, assessed again using the same measurement tool. The design is intended to measure the change that was presumably caused by the intervention.
STRENGTHS
➤ Can show comparison between before and after the intervention
➤ Progress is likely attributable in part to the intervention
LIMITATIONS
➤ Threats to internal validity:
➤ Historical considerations
➤ Maturation
➤ Testing and instrumentation
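One way to summarize a one-group pre-test/post-test evaluation is a change score per participant and a paired t statistic. This is a minimal sketch assuming both tests use the same numeric instrument; the scores are hypothetical, and the paired t statistic is a standard technique the slide does not itself prescribe.

```python
import math
import statistics

# Hypothetical scores for five participants on the same instrument (illustrative only).
pre = [12, 15, 9, 14, 10]
post = [15, 18, 10, 17, 14]

# Change score for each person: post-test minus pre-test.
diffs = [b - a for a, b in zip(pre, post)]
mean_change = statistics.mean(diffs)

# Paired t statistic: mean change divided by its standard error.
se = statistics.stdev(diffs) / math.sqrt(len(diffs))
t_stat = mean_change / se

print(diffs)  # [3, 3, 1, 3, 4]
print(f"mean change = {mean_change:.1f}, paired t = {t_stat:.2f}")
```

A large mean change shows that scores moved, not why they moved: history, maturation, and testing effects would produce the same numbers, which is the limitation the slide lists.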

Slide 30

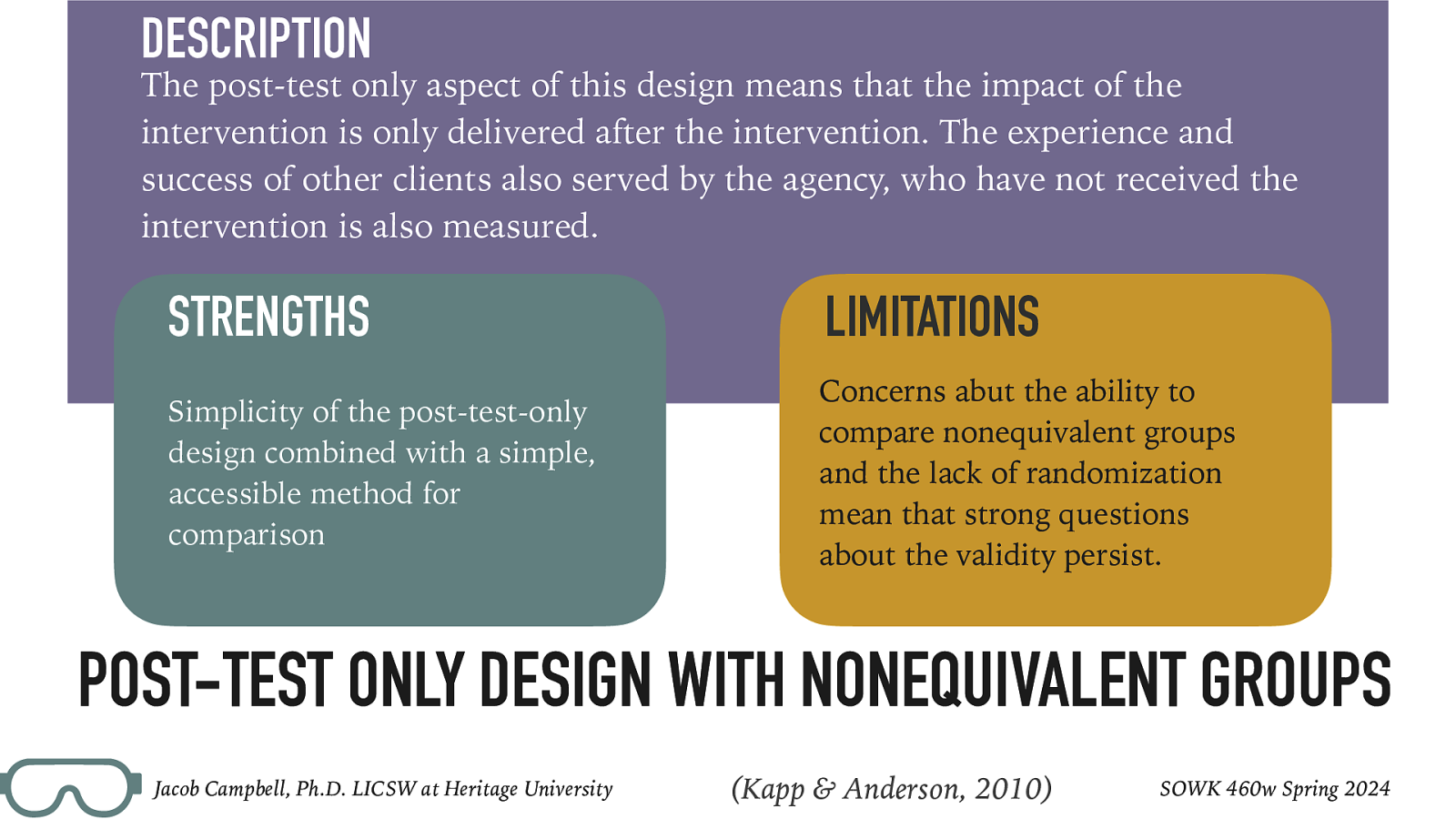

POST-TEST ONLY DESIGN WITH NONEQUIVALENT GROUPS (Kapp & Anderson, 2010)
DESCRIPTION
The post-test only aspect of this design means that the impact of the intervention is measured only after the intervention. The experience and success of other clients also served by the agency, who have not received the intervention, are also measured.
STRENGTHS
➤ Simplicity of the post-test only design combined with a simple, accessible method for comparison
LIMITATIONS
➤ Concerns about the ability to compare nonequivalent groups, together with the lack of randomization, mean that strong questions about validity persist
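With nonequivalent groups, the basic comparison is the difference in post-test means between clients who received the intervention and other clients who did not. The sketch below uses hypothetical scores and computes Welch's t statistic, an assumed choice that does not require equal group variances. Note that no calculation fixes the underlying problem: because the groups were not randomly formed, a difference may reflect pre-existing differences rather than the intervention.

```python
import math
import statistics

# Hypothetical post-test scores (illustrative only); groups were NOT randomly assigned.
intervention = [20, 22, 19, 24, 21]
comparison = [18, 17, 20, 16, 19]  # other agency clients who did not receive the intervention

diff = statistics.mean(intervention) - statistics.mean(comparison)

# Welch's t statistic: difference in means over its standard error (unequal variances allowed).
se = math.sqrt(statistics.variance(intervention) / len(intervention)
               + statistics.variance(comparison) / len(comparison))
t_stat = diff / se

print(f"difference in means = {diff:.1f}, Welch t = {t_stat:.2f}")
```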

Slide 31

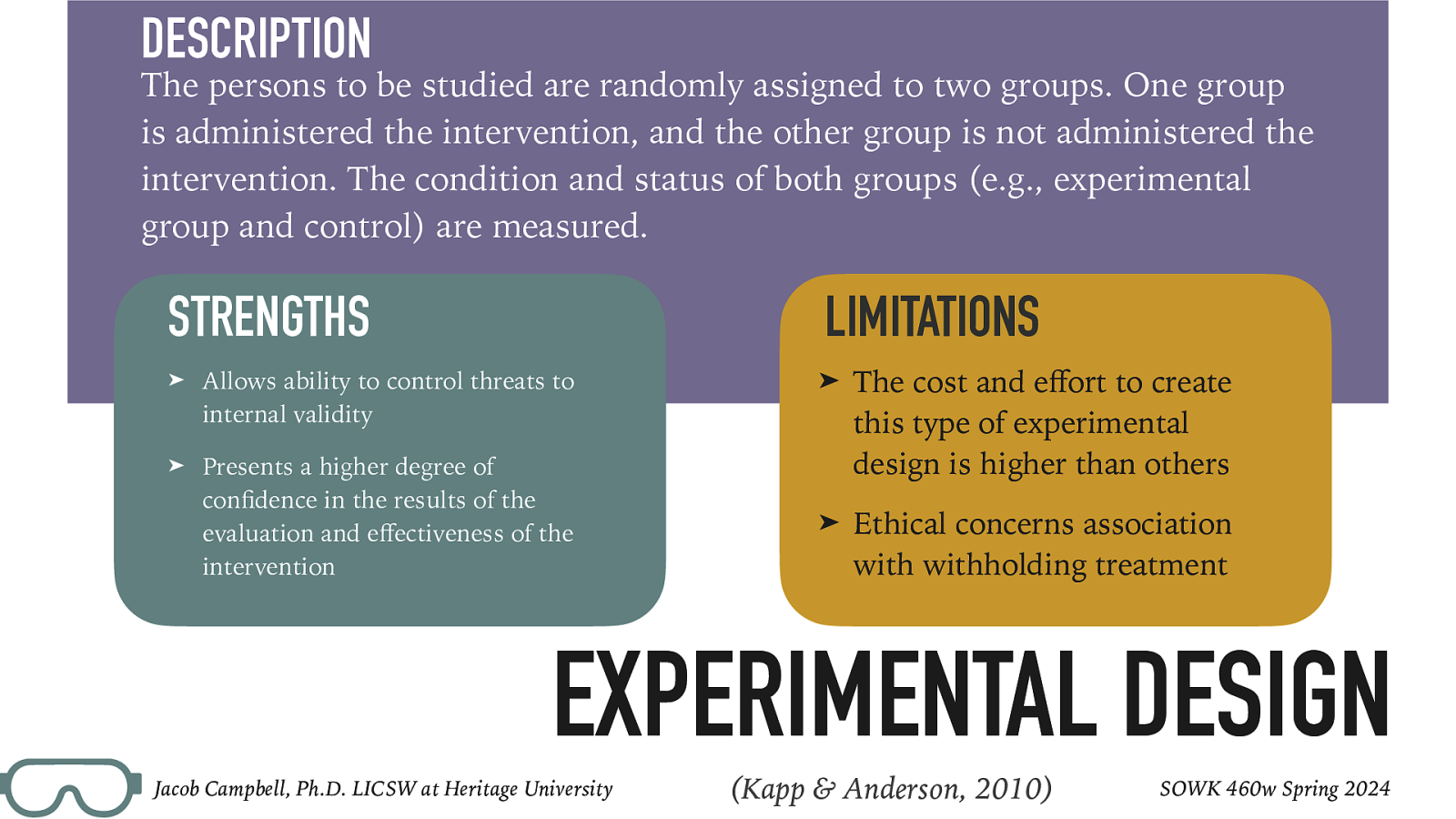

EXPERIMENTAL DESIGN (Kapp & Anderson, 2010)
DESCRIPTION
The persons to be studied are randomly assigned to two groups. One group receives the intervention, and the other group does not. The condition and status of both groups (the experimental group and the control group) are measured.
STRENGTHS
➤ Allows the evaluator to control threats to internal validity
➤ Presents a higher degree of confidence in the results of the evaluation and the effectiveness of the intervention
LIMITATIONS
➤ The cost and effort to create this type of experimental design are higher than for other designs
➤ Ethical concerns associated with withholding treatment
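Random assignment is the step that separates an experimental design from the other group designs. A minimal sketch, assuming two groups of (nearly) equal size: shuffle the participant list with a seeded generator, then split it in half. The function `randomly_assign` and the participant IDs are hypothetical.

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two groups of (nearly) equal size."""
    rng = random.Random(seed)  # seeded so the assignment can be documented and reproduced
    shuffled = list(participants)  # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"experimental": shuffled[:half], "control": shuffled[half:]}

# Hypothetical participant IDs (illustrative only)
participants = [f"P{i}" for i in range(1, 11)]
groups = randomly_assign(participants, seed=460)
print(len(groups["experimental"]), len(groups["control"]))  # 5 5
```

Because chance alone decides who lands in each group, pre-existing differences (motivation, severity, history) should balance out on average, which is why this design controls more threats to internal validity.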

Slide 32

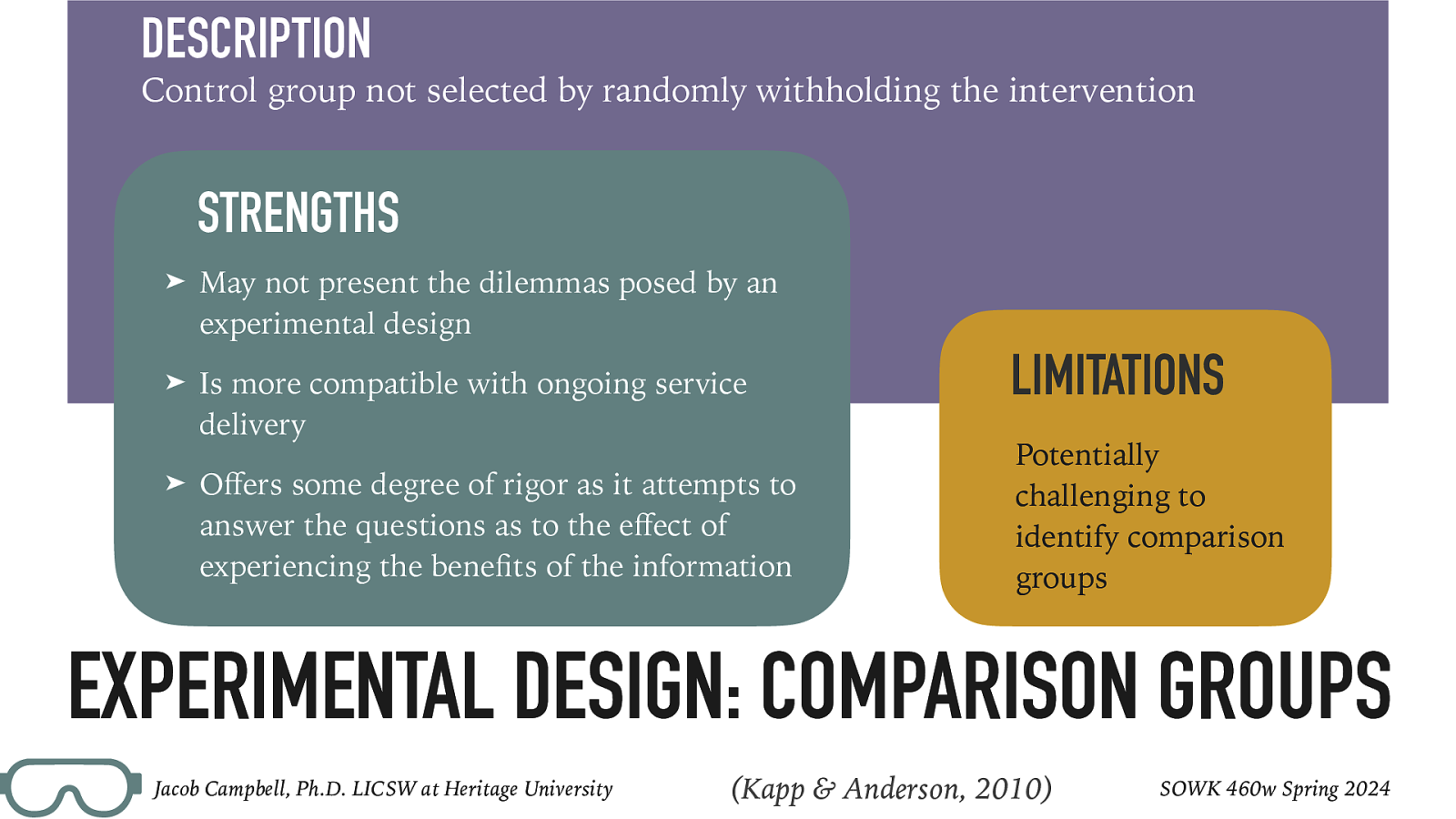

EXPERIMENTAL DESIGN: COMPARISON GROUPS (Kapp & Anderson, 2010)
DESCRIPTION
The control (comparison) group is not selected by randomly withholding the intervention.
STRENGTHS
➤ May not present the dilemmas posed by an experimental design
➤ Is more compatible with ongoing service delivery
➤ Offers some degree of rigor, as it attempts to answer the question of the effect of experiencing the benefits of the information
LIMITATIONS
➤ Potentially challenging to identify comparison groups
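One way to build a matched comparison group, offered here as an assumption rather than the method the slides prescribe, is greedy one-to-one nearest-neighbor matching on a baseline characteristic such as age. Each participant who received the intervention is paired with the closest remaining candidate, and each candidate is used only once. The function name and the ages are hypothetical.

```python
def match_comparison_group(treated, pool):
    """Greedy 1:1 nearest-neighbor matching on a single baseline variable (hypothetical helper).

    treated, pool: dicts mapping participant ID -> baseline value (e.g., age).
    Each comparison candidate is used at most once (matching without replacement).
    """
    available = dict(pool)
    matches = {}
    for person, value in treated.items():
        if not available:
            break  # ran out of comparison candidates
        # Pick the remaining candidate whose baseline value is closest.
        best = min(available, key=lambda cid: abs(available[cid] - value))
        matches[person] = best
        del available[best]
    return matches

# Hypothetical ages (illustrative only)
treated = {"A": 25, "B": 40, "C": 33}
pool = {"p1": 24, "p2": 41, "p3": 30, "p4": 35, "p5": 50}
print(match_comparison_group(treated, pool))  # {'A': 'p1', 'B': 'p2', 'C': 'p4'}
```

Matching only balances the characteristics you match on; unmeasured differences can remain, so this approach offers some rigor without the guarantees of random assignment.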

Slide 33

GROUP WORK PLAN
Check in